Kinetica is one of the fastest distributed analytics databases in the world. Its vectorized compute engine can perform complex analytical tasks at scale in real-time. But speed and performance are useless if you don’t get the basics of a distributed system right.

Distributed databases introduce extra challenges

A database system is ‘distributed’ if its components are located on different computers connected via a network. Such a system spreads the data and analytical load across several servers rather than relying on a single, comparatively large one.

This model has numerous advantages over centralized databases. Distributed databases can handle more concurrent tasks, at lower cost, with higher availability and resilience. Because of these advantages, most modern enterprises rely either partially or fully on distributed databases for their operational and analytical needs.

But distributed databases bring new challenges. The first challenge has to do with complexity and the second with performance.

No matter how powerful your system, it will grind to a halt if you don’t design your database to address these performance and complexity constraints. You won’t unlock the true value of your data – particularly as it starts to scale.

Complexity issues

Distributing data across different computers introduces complexity, as clients access and query data from multiple locations instead of from a single centralized location.

The first generation of distributed data systems, such as Hadoop, tackled this challenge with the MapReduce paradigm. With MapReduce, a query is mapped to the different nodes in a system, where each node applies it to its own portion of the data. The results from each node are then reduced to arrive at the final output.
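To make the map and reduce steps concrete, here is a minimal, single-process sketch in Python. It is not how Hadoop or Kinetica implement anything. The `node_partitions` data and the function names are hypothetical, and each "node" is just a list of rows. The map step summarizes the rows held locally, and the reduce step merges those summaries into an average per store.

```python
from collections import defaultdict

# Hypothetical data: each inner list is the portion of a sales table held by one node.
node_partitions = [
    [("A", 10.0), ("B", 4.0)],
    [("A", 20.0), ("C", 6.0)],
    [("B", 8.0), ("A", 30.0)],
]


def map_phase(rows):
    """Runs on one node: summarize only the local rows as store -> (sum, count)."""
    partial = defaultdict(lambda: [0.0, 0])
    for store, amount in rows:
        partial[store][0] += amount
        partial[store][1] += 1
    return {store: tuple(acc) for store, acc in partial.items()}


def reduce_phase(partials):
    """Combines the per-node summaries into one average per store."""
    totals = defaultdict(lambda: [0.0, 0])
    for partial in partials:
        for store, (subtotal, count) in partial.items():
            totals[store][0] += subtotal
            totals[store][1] += count
    return {store: subtotal / count for store, (subtotal, count) in totals.items()}


partials = [map_phase(rows) for rows in node_partitions]
average_sales = reduce_phase(partials)
print(average_sales)  # {'A': 20.0, 'B': 6.0, 'C': 6.0}
```

Even for a simple average, the author has to decide what each node computes and how the partial results are merged, which is the kind of detail a declarative query hides.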

MapReduce, however, is not as intuitive or easy to use as the SQL-based declarative syntax that predated it. This makes it harder to work with, particularly for non-developers. As a result, data analysts without a software engineering background often fail to get the most out of distributed big data systems.

Performance issues

A performance bottleneck that plagues all types of databases – distributed or otherwise – is the movement of data to and from a hard disk. Reading or writing data on a hard disk is much slower than doing so in system memory (RAM), and shuffling data between these two components hurts performance.

Moving data between nodes is a form of data transfer unique to distributed systems. Queries that bring together data from different nodes have to move that data over the network. This is by far the biggest performance bottleneck in a distributed system: moving data over the network is around 20 times slower than moving it from a hard disk.

Now, let’s see how Kinetica’s design features address these challenges.

Unlock the true value of your data with distributed SQL

Kinetica is a SQL database. Over the last decade, we’ve built an integrated, SQL-based library of geospatial, graph, time series, and OLAP functions which can deliver blistering performance at scale. Kinetica also comes with out-of-the-box connectors to load and export data from different tools, and features AI and machine learning capabilities.

    -- Create a graph of a road network
    CREATE OR REPLACE DIRECTED GRAPH mm_lakes
    (
        EDGES => INPUT_TABLE(
            SELECT
                shape AS WKTLINE,
                direction AS DIRECTION,
                time AS WEIGHT_VALUESPECIFIED
            FROM mm_lakes_shape
        ),
        OPTIONS => KV_PAIRS(
            graph_table = 'mm_lakes_graph_table'
        )
    );

What’s more, you can access this powerful functionality using SQL – allowing you to build and deploy complex analytical pipelines that hook on to streaming and historical data sources, execute advanced queries, and obtain real-time results.

A tiered, memory-first architecture

A key pain point for all databases, distributed or otherwise, is the movement of data between a hard disk and RAM.

It would be wonderful if we could store all our data in memory so we’d never have to read or write to a hard disk. But unfortunately, RAM is far more expensive than disk, and storing all your operational data in system memory is typically not an option.

So how do you get the most out of a limited resource? The answer is straightforward – you use a database that can prioritize its use.

Kinetica’s tiered storage and resource management features prioritize the use of VRAM and RAM tiers for more frequently used, ‘hot’ and ‘warm’ data.

Users can specify tier strategies that set eviction priorities for different data objects. Whenever the space used in a tier crosses a set threshold (the high water mark), Kinetica begins an eviction process, starting with lower-priority data objects, and continues until space utilization falls to an acceptable level (the low water mark).
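The watermark logic described above can be sketched in a few lines of Python. This is an illustration of the general technique, not Kinetica's implementation. The `DataObject` class, the `evict` function, the 90% and 50% watermarks, the sizes, and the convention that a higher number means a higher priority are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class DataObject:
    name: str
    size_gb: float
    priority: int  # assumption: a higher number means "keep in this tier longer"


def evict(objects, capacity_gb, high_water=0.9, low_water=0.5):
    """Return (kept, evicted) for a single tier.

    Nothing is evicted until usage exceeds the high water mark. After that,
    the lowest-priority objects are evicted until usage is at or below the
    low water mark.
    """
    used = sum(o.size_gb for o in objects)
    if used <= high_water * capacity_gb:
        return list(objects), []

    kept = sorted(objects, key=lambda o: o.priority)  # lowest priority first
    evicted = []
    while kept and used > low_water * capacity_gb:
        victim = kept.pop(0)
        used -= victim.size_gb
        evicted.append(victim)
    return kept, evicted


# Hypothetical contents of a 100 GB RAM tier, currently 95% full.
ram_tier = [
    DataObject("recent_trades", 40, priority=9),
    DataObject("last_year_archive", 30, priority=1),
    DataObject("monthly_rollup", 25, priority=4),
]

kept, evicted = evict(ram_tier, capacity_gb=100)
print([o.name for o in evicted])  # ['last_year_archive', 'monthly_rollup']
print([o.name for o in kept])     # ['recent_trades']
```

Using two thresholds rather than one means the tier is not evicting a single object every time usage creeps over the limit. Eviction starts late and frees a meaningful amount of space in one pass.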

By moving less-utilized data to lower, disk-based tiers, Kinetica maximizes the space for more frequently used data in the higher RAM and VRAM tiers. This makes it less likely that you’ll have to load data from the hard disk into RAM, thereby avoiding that performance overhead.

Distributing data intelligently

The biggest bottleneck in a distributed system is caused by the movement of data between nodes. Kinetica addresses this constraint in two ways – sharding and replication.

Sharding

Sharding is a scheme for distributing data across different nodes. This can be done either randomly or based on the values of a particular column.

How you choose to shard (distribute) your data (and the consequent performance implications) will depend on the types of queries you want to execute. A well-thought-out sharding scheme can reduce the need to move data from one node to another by keeping data that are likely to be used together on the same node.

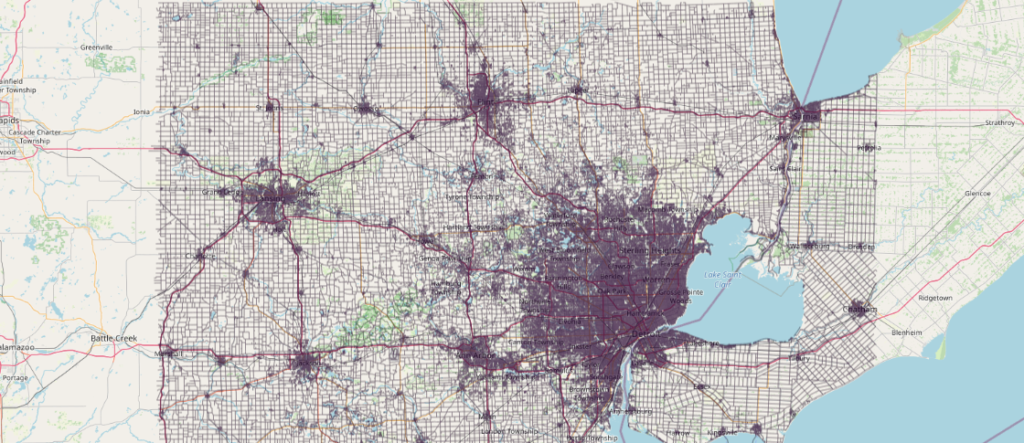

For instance, let’s say a retail company has two tables, one for sales and another for inventory, each covering every product in every store. The company has a total of 4 stores (A, B, C and D) and 100 products (numbered 1 to 100).

Most of the queries that the company wants to run involve information for each product in a store. For example, a typical query might seek to find the relationship between the average sales and inventory level for a particular product in a particular store.

In such a scenario, sharding the sales and inventory tables based on store and product IDs will ensure that the data for each combination of product and store is co-located on the same node. As a result, any query that needs to combine sales and inventory information for a specific combination of store and product will have all the rows it needs on the same node. For example, all the data required to answer whether the sales of product no. 10 in store A affect the inventory level of product no. 10 in store A will be located on the same node.
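Here is a small Python sketch of why sharding both tables on the same key co-locates matching rows. It is a simplified illustration, not Kinetica's sharding algorithm. The three-node cluster, the `node_for` function, and the use of a CRC32 hash are assumptions made for the example.

```python
import zlib

NUM_NODES = 3  # assumed cluster size


def node_for(store, product, num_nodes=NUM_NODES):
    """Map a (store, product) shard key to a node using a deterministic hash."""
    key = f"{store}:{product}".encode("utf-8")
    return zlib.crc32(key) % num_nodes


# Every store/product combination from the example: 4 stores x 100 products.
keys = [(store, product) for store in "ABCD" for product in range(1, 101)]

# Place sales rows and inventory rows independently, using the same shard key.
sales_placement = {key: node_for(*key) for key in keys}
inventory_placement = {key: node_for(*key) for key in keys}

# Rows that share a key always share a node, so a join on (store, product)
# never has to fetch rows from another node.
co_located = all(sales_placement[k] == inventory_placement[k] for k in keys)
print(co_located)  # True
```

Because the node is a pure function of the shard key, the sales and inventory rows for product no. 10 in store A land on the same node without the two tables ever being coordinated. Random distribution offers no such guarantee.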

Distributed databases by their nature require us to split the data across different nodes. But sharding allows us some degree of control over this distribution so that we can minimize the need to shuffle large amounts of data between nodes in a system to complete a query.

Replication

Replication is the copying of the same table across multiple nodes in a system. Sometimes it makes sense to replicate a table across all the nodes instead of distributing its rows between them. This is typically done only for smaller tables, so that the extra copies don’t use up a lot of storage space.

Let’s say we have a table with information on each of the 4 stores A, B, C and D, such as the average number of employees, location, and annual revenue. Replicating this table makes it available on every node, so queries that require data from it won’t have to move that data from other nodes in the cluster.
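The effect of replication on a join can be sketched as follows. This is a toy model rather than Kinetica's query engine. The store locations, the sales rows, the three-node layout, and the `join_on_node` helper are all hypothetical. The function counts how many sales rows need a store record that is not held on the local node.

```python
# Hypothetical small table: one row per store (store ID -> location).
stores = {"A": "Austin", "B": "Boston", "C": "Chicago", "D": "Denver"}

# Hypothetical sales rows (store, amount), already spread over three nodes.
sales_by_node = [
    [("A", 10.0), ("C", 6.0)],
    [("B", 8.0), ("D", 3.0)],
    [("A", 30.0), ("B", 4.0)],
]


def join_on_node(sales_rows, local_stores, all_stores):
    """Join sales to stores on one node and count lookups that need another node."""
    joined, remote_lookups = [], 0
    for store, amount in sales_rows:
        if store not in local_stores:
            remote_lookups += 1  # this row's store record lives on another node
        joined.append((store, all_stores[store], amount))
    return joined, remote_lookups


# Case 1: the stores table is replicated, so every node holds all four rows.
replicated = sum(join_on_node(rows, stores, stores)[1] for rows in sales_by_node)

# Case 2: the stores table is split, so each node holds only part of it.
split_stores = [{"A": "Austin", "B": "Boston"}, {"C": "Chicago"}, {"D": "Denver"}]
distributed = sum(
    join_on_node(rows, local, stores)[1]
    for rows, local in zip(sales_by_node, split_stores)
)

print(replicated, distributed)  # 0 5
```

With the small table replicated, every lookup is local. With it split across nodes, five of the six sales rows in this example would need data from another node.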

Try now for free

We’ve barely scratched the surface of the design features that make it possible to get the most out of Kinetica’s vectorized compute engine. But this should serve as a bird’s-eye view of some of the key design features that every distributed database needs to get right to offer exceptional performance.

You can read more about these features and how to use them on Kinetica’s documentation website. Also, don’t forget to try out our free developer edition at https://www.kinetica.com/try/. It takes just a few minutes to set up and get started.