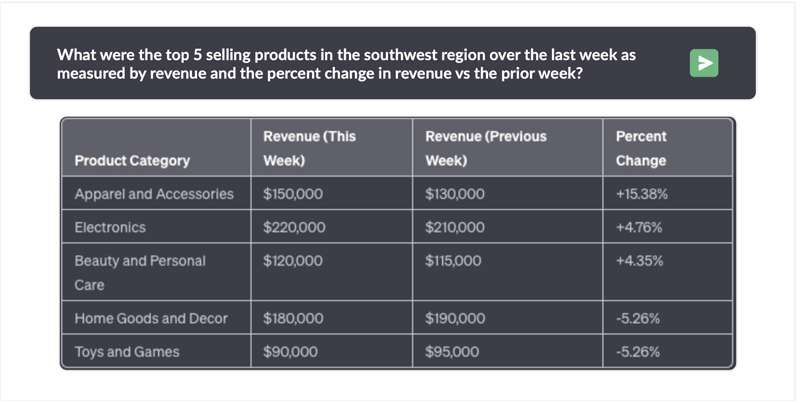

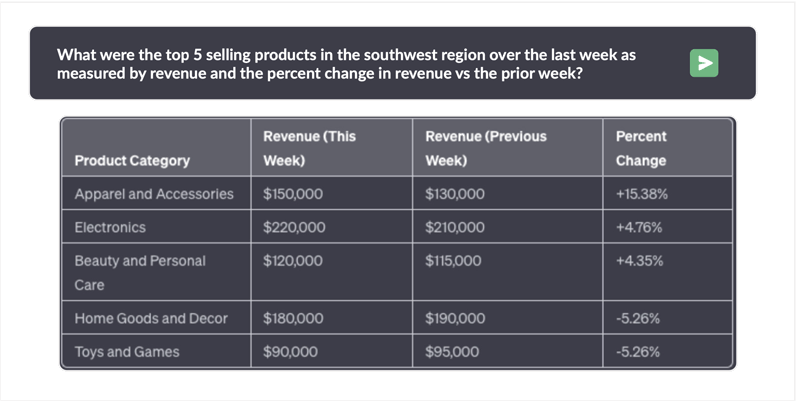

Querying enterprise data in natural language is a long-standing aspiration: type a question, get an answer from your own data.

Numerous vendors have pledged this functionality, only to disappoint in terms of performance, accuracy, and smooth integration with current systems. Over-hyped solutions turned out to be painfully slow, frustrating users who expected conversational interactions with their data. To work around performance issues, many vendors demo questions known in advance, which is antithetical to the free-form agility enabled by generative AI.

Accuracy issues have been just as vexing: hallucinated SQL either fails with syntax errors or runs and returns answers that are completely incorrect. Enterprises and government agencies are explicitly banning the use of public LLMs, such as those from OpenAI, that expose their data. And when it comes to integration, cumbersome processes introduce significant complexity and security risks.

Kinetica has achieved a remarkable feat by fine-tuning a native Large Language Model (LLM) to be fully aware of Kinetica’s syntax and the conventional industry Data Definition Languages (DDLs). By integrating these two realms of syntax, Kinetica’s SQL-GPT functionality has a deep understanding of the nuances specific to Kinetica’s ecosystem, as well as the broader context of how data structures are defined in the industry.

Accuracy

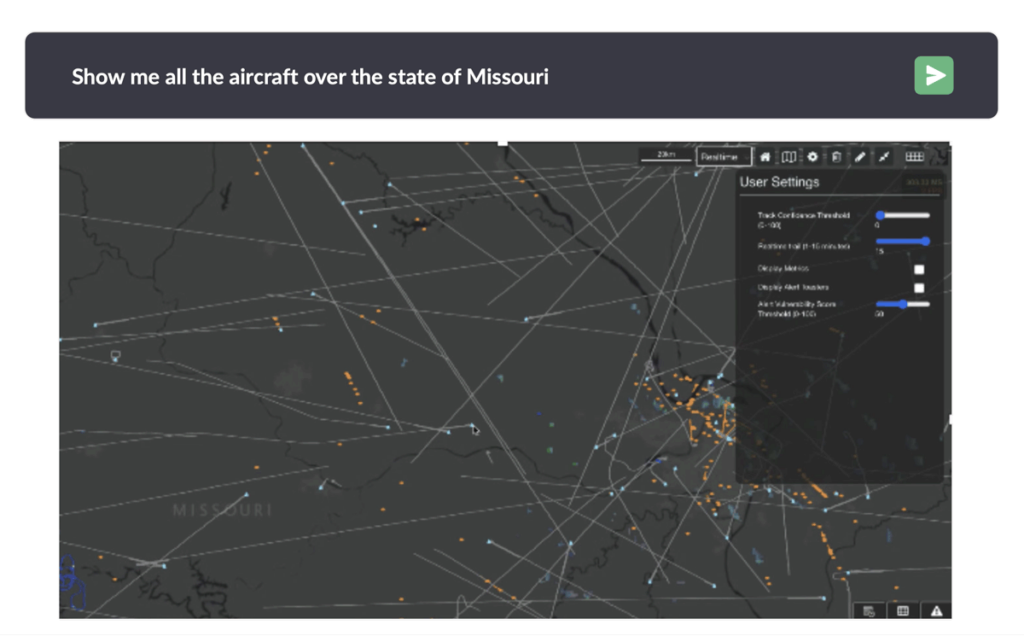

The unique advantage of Kinetica’s model lies in its training on proprietary syntax, enabling it to harness specialized analytic functions such as time-series, graph, geospatial, and vector search. This means that we can readily address complex queries such as “Show me all the aircraft over Missouri.” To execute this query, our model generates a geospatial join that matches the shape of the state against the precise locations of aircraft. The results can then be visualized on a map. This capability lets us handle intricate tasks beyond standard ANSI SQL efficiently, offering users valuable insights and visualizations for enhanced decision-making.
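To make the idea of a geospatial join concrete, here is a minimal sketch in plain Python. It is not Kinetica’s implementation or syntax. The function names, the square regions, and the aircraft positions are all hypothetical and chosen only for illustration. It pairs each point with every polygon that contains it, using the standard ray-casting test.

```python
def point_in_polygon(lon, lat, polygon):
    """Ray-casting test: count how many polygon edges a ray going east crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the point's latitude can be crossed.
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def spatial_join(points, regions):
    """Return (point_id, region_name) for every point inside a region."""
    return [
        (point_id, name)
        for point_id, lon, lat in points
        for name, polygon in regions.items()
        if point_in_polygon(lon, lat, polygon)
    ]


# Hypothetical data: two square "states" and three aircraft positions.
REGIONS = {
    "region_a": [(0, 0), (10, 0), (10, 10), (0, 10)],
    "region_b": [(20, 0), (30, 0), (30, 10), (20, 10)],
}
AIRCRAFT = [("AC1", 5, 5), ("AC2", 25, 3), ("AC3", 15, 5)]

matches = spatial_join(AIRCRAFT, REGIONS)
print(matches)
```

A database performs the same containment test, but against real boundary geometry and typically with spatial indexing or massive parallelism rather than a nested loop.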

With Kinetica’s native LLM for SQL creation, we ensure unwavering consistency. A prompt that once produced working SQL from OpenAI or another public LLM can unpredictably stop working, with no clear explanation. In contrast, Kinetica provides a reliable guarantee that the responses we generate will remain functional over time, offering businesses and users the peace of mind that their SQL queries will consistently deliver the desired results.

In our approach to inferencing, we prioritize the optimization of SQL generation. OpenAI and other public LLMs are primarily tuned for creativity, often employing sampling for inferencing, which can lead to diverse but less predictable responses. To enhance the consistency of our SQL generation, we disable sampling. Instead, our model employs a more robust “beam search” technique, which meticulously explores multiple potential paths before selecting the most suitable response. This deliberate approach ensures that our SQL queries are not only consistent but also effectively tailored to the specific task at hand, delivering accurate and optimized results.
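The difference between always taking the single most likely next token and beam search can be shown with a toy example. The sketch below is illustrative only: `TOY_MODEL` is a made-up lookup table standing in for a language model, and `beam_search` is a hypothetical helper, not Kinetica’s inference code. Because nothing is sampled, the same input always yields the same output.

```python
import math

# Hypothetical toy "model": maps a token prefix to next-token probabilities.
TOY_MODEL = {
    (): {"SELECT": 0.6, "WITH": 0.4},
    ("SELECT",): {"*": 0.5, "id": 0.5},
    ("WITH",): {"t": 1.0},
    ("SELECT", "*"): {"<eos>": 1.0},
    ("SELECT", "id"): {"<eos>": 1.0},
    ("WITH", "t"): {"<eos>": 1.0},
}


def beam_search(model, beam_width, max_len=10, eos="<eos>"):
    """Deterministic beam search; returns (tokens, log_probability)."""
    beams = [((), 0.0)]  # unfinished hypotheses: (tokens, log-prob)
    finished = []
    for _ in range(max_len):
        candidates = []
        for tokens, score in beams:
            for tok, p in model.get(tokens, {}).items():
                candidates.append((tokens + (tok,), score + math.log(p)))
        if not candidates:
            break
        # Highest score first; ties broken by token order for determinism.
        candidates.sort(key=lambda c: (-c[1], c[0]))
        beams = []
        for tokens, score in candidates[:beam_width]:
            if tokens[-1] == eos:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    pool = finished or beams
    return max(pool, key=lambda c: c[1])


greedy_tokens, greedy_score = beam_search(TOY_MODEL, beam_width=1)
beam_tokens, beam_score = beam_search(TOY_MODEL, beam_width=2)
print(greedy_tokens, round(math.exp(greedy_score), 3))
print(beam_tokens, round(math.exp(beam_score), 3))
```

With a beam width of 1 the search commits to `SELECT` because it is the likeliest first token, and ends with a sequence of total probability 0.3. With a width of 2 it keeps `WITH` alive long enough to discover the complete sequence with probability 0.4.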

Performance

Vectorization and the utilization of powerful Graphics Processing Units (GPUs) play a pivotal role in the performance achieved by Kinetica’s SQL-GPT. By harnessing the principles of vectorization, which involves performing operations on multiple data elements simultaneously, SQL-GPT can process and analyze data at an extraordinary speed. This compute paradigm allows Kinetica to quickly tackle complex SQL queries with multi-way joins, which are often required for answering novel questions. As a result, Kinetica SQL-GPT becomes an invaluable tool in data analysis, providing rapid insights and solutions to even the most intricate analytical challenges. Kinetica crushes the competition on independently verified TPC-DS benchmarks.

The speed achieved through GPU acceleration and vectorization in Kinetica is transformative in its implications. One of the key benefits is that it eliminates the need for extensive data pre-engineering to answer anticipated questions. Traditional databases often rely on indexes, denormalization, summaries, and materialized views to optimize query performance, but with Kinetica’s capabilities, these steps become unnecessary. The true allure of generative AI technologies lies in their capacity to enable users to explore uncharted territory by asking entirely new questions that might not have been considered before. This freedom to inquire and discover without the constraints of pre-engineering is a game-changer in the world of data analytics and insights, offering unprecedented opportunities for innovation and exploration.

Security

One of Kinetica SQL-GPT’s most compelling advantages is the ability to safeguard customer data. We provide the option for inferencing to take place within our organization or on the customer’s premises or cloud perimeter. This feature sets us apart, particularly in the context of large organizations where data security is paramount. Many such entities have implemented stringent security policies that have led to the prohibition of public LLM usage. Unlike with public LLMs, no external API call is required, and data never leaves the customer’s environment. By offering a secure environment for inferencing, we empower organizations to harness the benefits of generative AI without compromising their data integrity or falling afoul of their security protocols, making us a trusted and preferred choice in the realm of AI solutions.

Smooth Integration

Kinetica’s data management strategies are designed to minimize data movement and maximize flexibility. One approach it employs is the use of external tables, which results in zero data movement. When a query is executed, it is pushed down directly into the database where the data resides, eliminating the need to transfer large datasets, thereby enhancing query speed and efficiency. Additionally, organizations can further optimize performance by caching data through Kinetica’s change data capture (CDC), a technique that boosts processing speed while removing complexity and minimizing data movement. Kinetica offers the convenience of pre-built connectors for a vast array of data sources, including Snowflake, Databricks, Salesforce, Kafka, BigQuery, Redshift, and many others.

Kinetica’s API for SQL-GPT makes it easy to build LLM apps, streamlining the development of AI-driven solutions on internal enterprise data. Simply pass your question in natural language to the API and get the resulting answer back.

Give analysts and SQL laymen what they’ve always wanted: a platform to get answers at the speed of thought. Try SQL-GPT today at www.kinetica.com/try.