Every so often, there emerges a technological breakthrough that fundamentally shifts the way we interact with the world—transforming mere data points into a narrative that elevates human experience.

For decades, big data analytics has been a daunting landscape for most lay business users, navigable only by teams of specialists: analysts, engineers, and developers. They have been the gatekeepers, setting up analytical queries and crafting data pipelines capable of wresting insights from immense volumes of data. Yet the advent of Large Language Models (LLMs) has started to democratize this space, extending an invitation to a broader spectrum of users who lack these specialized skills.

At Kinetica, we’re not just building a database; we’re scripting the lexicon for an entirely new dialect of big data analytics: a dialect empowered by LLMs and designed to catalyze transformative digital dialogues with your data, regardless of its size.

In this article, I’ll unpack the salient features that make our approach unique and transformative.

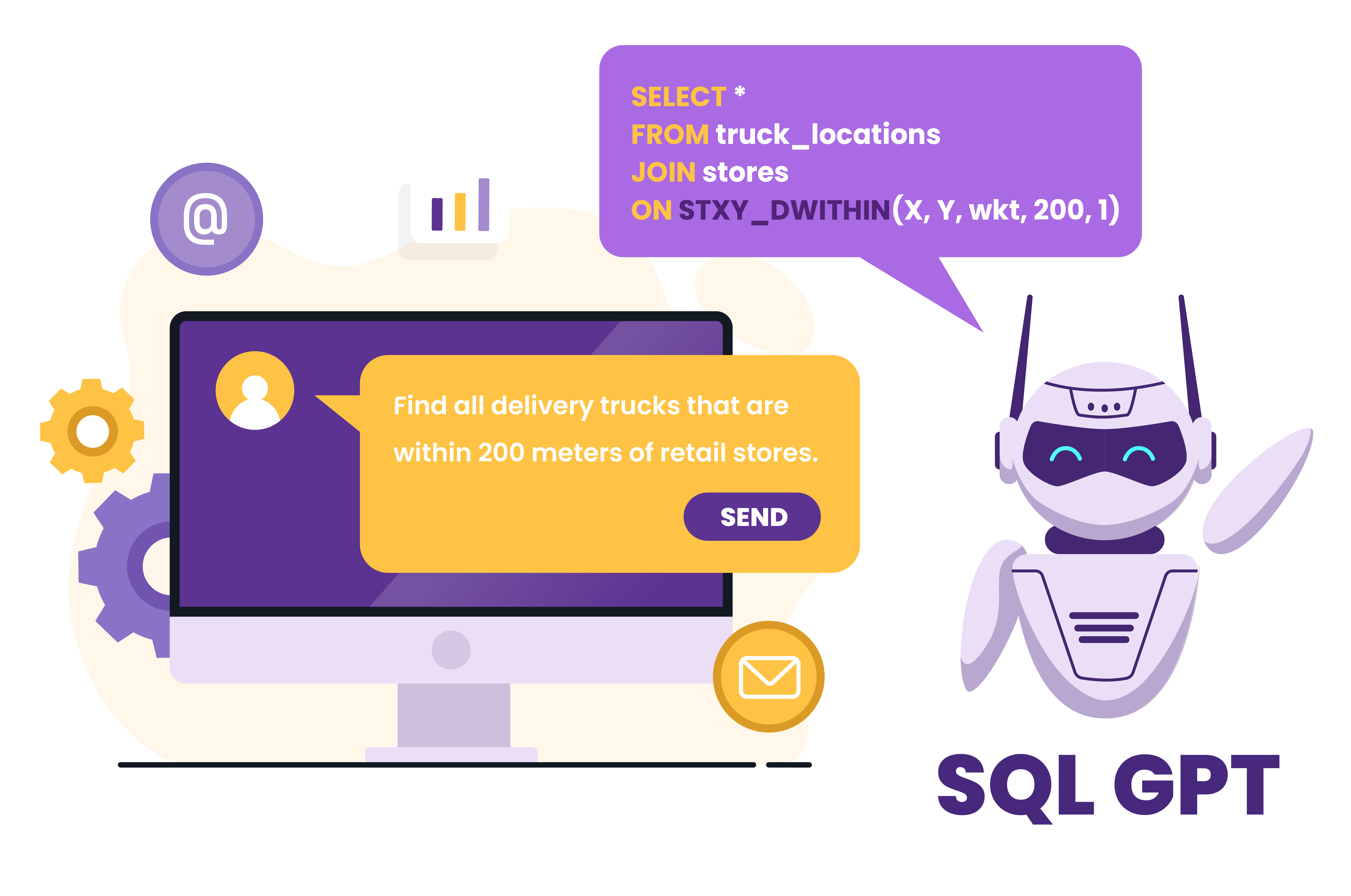

A pioneering approach to the Language-to-SQL paradigm

Several databases have implemented a Language-to-SQL feature that takes a prompt written in natural language and converts it to SQL. But these implementations are not deeply integrated with the core database, and they require the user to manage the interaction with the LLM.

We have taken a different approach at Kinetica, one rooted in our belief that the primary mode for analyzing big data will be conversational, powered by LLMs and an engine that can execute ad hoc queries on massive amounts of data. This requires that the SQL generated by the LLM be tightly coupled to the database objects that exist in the user’s environment, without compromising security.

To realize this vision, we have baked constructs directly into our database engine that allow users to interact more natively with an LLM. Through our SQL API, users can define context objects, provide few-shot training samples, and execute LLM output directly.

    -- A template for specifying context objects
    CREATE CONTEXT [<schema name>.]<context name>
    (
        -- table definition clause template
        (
            TABLE = <table name>
            [COMMENT = '<table comment>']
            -- column annotations
            [COMMENTS = (
                <column name> = '<column comment>',
                ...
                <column name> = '<column comment>'
            )
            ]
            -- rules and guidelines
            [RULES = (
                '<rule 1>',
                ...
                '<rule n>'
            )
            ]
        ),
        <table definition clause 2>,
        ...
        <table definition clause n>,
        -- few shot training samples
        SAMPLES = (
            '<question>' = '<SQL answer>',
            ...
            '<question>' = '<SQL answer>'
        )
    )
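As a rough illustration, the template above might be filled in as follows. This is only a sketch: the schema, table, column, and context names (`demo`, `trips`, `pickup_time`, `fare_amount`, `trips_ctx`) are hypothetical, and the punctuation between clauses simply follows the template as written, so it should be checked against the Kinetica documentation before use.

```sql
-- Hypothetical example: every object name below is illustrative only.
CREATE CONTEXT demo.trips_ctx
(
    (
        TABLE = demo.trips
        COMMENT = 'One row per completed taxi trip'
        -- column annotations
        COMMENTS = (
            pickup_time = 'Timestamp when the trip started',
            fare_amount = 'Fare in US dollars, excluding tips'
        )
        -- rules and guidelines
        RULES = (
            'Use fare_amount when asked about fares'
        )
    ),
    -- few shot training samples
    SAMPLES = (
        'How many trips were there?' = 'SELECT COUNT(*) FROM demo.trips'
    )
);
```

The comments and rules give the LLM plain-language hints about what each column means, while the sample pairs a question with the SQL that should answer it.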

    -- A template for a generate SQL request
    GENERATE SQL FOR '<question>'
    WITH OPTIONS (
        context_name = '<context object>',
        [ai_api_provider = '<sqlgpt|sqlassist>',]
        [ai_api_url = '<API URL>']
    );

A user can specify multiple cascading context objects that give the LLM the referential context it needs to generate accurate, executable SQL. Context objects are first-class citizens in our database, right beside tables and data sources. They function as a managerial layer between the user and the LLM, optimizing query results and performance. The existence of context objects creates a simplified operational workflow that ensures both speed and accuracy.
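A minimal sketch of a generate request built from the template above, with the optional provider and URL options left out. The context name `demo.trips_ctx` and the question are hypothetical and stand in for whatever exists in your environment.

```sql
-- Hypothetical example: demo.trips_ctx is an illustrative context name.
GENERATE SQL FOR 'What was the average fare per day last week?'
WITH OPTIONS (
    context_name = 'demo.trips_ctx'
);
```

The request returns SQL generated by the LLM from the question and the named context, which can then be reviewed and run like any other query.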

Hosted and On-Prem LLM services: Your choice, our delivery

At Kinetica, we believe that our users should have the freedom to choose. While we offer a private on-prem LLM service that is fine-tuned to generate queries that use Kinetica functions, we have decoupled the LLM entirely from the database, so developers can use a standard SQL interface with any LLM they want and swap LLMs as they prefer. You can use Kinetica’s native LLM service or opt for a third-party LLM such as the GPT models from OpenAI, whichever service model aligns with your requirements.

That said, generating accurate and executable SQL still requires giving the LLM additional guidelines to reduce its tendency to hallucinate. Using our SQL API, developers can specify rules and guidelines that are sent to the LLM as part of the context. This helps the LLM generate queries that are performant and that use the Kinetica variant of SQL where necessary.

Enterprise-grade security

Kinetica is an enterprise-grade database that takes data security and privacy seriously. Because a user interacting with an LLM can glean information about the underlying data from the context, we have made context objects permissioned, giving database administrators full control over who can access and use them.

However, if you are using an externally hosted service like OpenAI, your context data might still be vulnerable. Customers who need an additional layer of security can use our on-prem native LLM service to address this concern. With the Kinetica LLM, your data and context never leave your database cluster, and you still get the full benefit of an LLM that powers a conversation with your data.

Performance: It’s Not Just About Accurate SQL

Conversations require speed. You cannot have a smooth analytical conversation with your data if the engine takes minutes or hours to answer your question. This is our competitive edge: Kinetica is a fully vectorized database that can return query results in seconds, even for queries that other databases can’t execute at all.

Apart from the obvious benefit of keeping the conversation flowing by quickly returning results for ad hoc queries generated by an LLM, speed also has a less obvious benefit: it helps improve the accuracy of the SQL generated by the LLM.

Because we are really fast, we can easily maintain logical views that capture joins across large tables or other complex analytical operations. This allows context objects to feed semantically simplified yet equivalent representations of the data to the LLM. This approach ensures that even the most intricate data models are interpreted accurately, thereby boosting the reliability of query results.
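To make the idea concrete, here is a sketch of a view that hides a join behind a single flat object, followed by a context that describes only the view. The tables `demo.orders` and `demo.customers`, their columns, and the context name are hypothetical. The context syntax follows the template shown earlier in this article, and pointing a context at a view is assumed from the description above rather than confirmed here.

```sql
-- Hypothetical tables: demo.orders and demo.customers are illustrative only.
CREATE VIEW demo.customer_orders AS
SELECT
    o.order_id,
    o.order_date,
    o.amount,
    c.customer_name,
    c.region
FROM demo.orders AS o
JOIN demo.customers AS c
    ON o.customer_id = c.customer_id;

-- The LLM sees one flat object instead of a two-table data model.
CREATE CONTEXT demo.customer_orders_ctx
(
    (
        TABLE = demo.customer_orders
        COMMENT = 'One row per order, with customer attributes already joined in'
    ),
    SAMPLES = (
        'What is the total order amount by region?' =
            'SELECT region, SUM(amount) AS total_amount FROM demo.customer_orders GROUP BY region'
    )
);
```

With this arrangement the LLM never has to work out the join condition itself, which removes one common source of incorrect SQL.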

A database you can build on

Kinetica is a database for developers. Our vision is to enable conversations with your data, no matter the scale. We want developers to help us realize this vision by building the next generation of applications that harness the potential of generative AI and the raw speed and performance of Kinetica.

Our native APIs cover C++, C#, Java, JavaScript, Node.js, Python, and REST. You can use these to build tools that help users analyze data at scale using just natural language.

Conclusion

The future of conversational analytics rests on two pillars: the capability to convert natural language into precise SQL and the speed at which these ad hoc queries can be executed. Kinetica addresses both, setting the stage for truly democratized big data analytics, where anyone can participate.

Experience this right now with Kinetica.